Code Red at OpenAI: Strategy Meets Technology at the Inflection Point of Global AI Competition

OpenAI’s crisis is not about moving too fast or too slow—it’s about strategy and technology accelerating in different directions.

OpenAI has entered what many insiders describe as a “Code Red” moment: a period where strategic uncertainty collides with unprecedented technological velocity. The phrase is not hype. It captures a profound misalignment emerging inside one of the world’s most influential AI companies, a divergence among its founding mission, its commercial reality, accelerating global competition, and the cascading consequences of its own breakthroughs.

This crisis is emblematic of a broader reality facing modern enterprises:

Technology without strategy is just potential. Strategy without technology is just a plan. True transformation only occurs where they meet.

OpenAI is not the first technology organization to face this dilemma, but it is certainly the most consequential. What happens at OpenAI in the next 12–36 months will shape global AI innovation, geopolitical positioning, regulatory trajectories, and the competitive landscape across industries.

This article examines the roots of OpenAI’s Code Red; the intensifying global competitive dynamics, including advances from China and from Google’s Gemini Nano and Nano Banana models; the rising challenge of the feature/function arms race; and the path toward reintegrating governance, mission, and capability. It concludes with actionable strategic insights for executives navigating similar pressures in their own organizations.

The Origins of the Code Red: A Mission Strained by Market Reality

OpenAI began with a bold, idealistic intention: to ensure that artificial general intelligence benefits all of humanity. Founded as a nonprofit in 2015, it adopted a capped-profit structure in 2019 that was designed to keep commercial incentives subordinate to safety and the public interest. But as breakthroughs became more powerful and investments ballooned, this delicate balance began to falter.

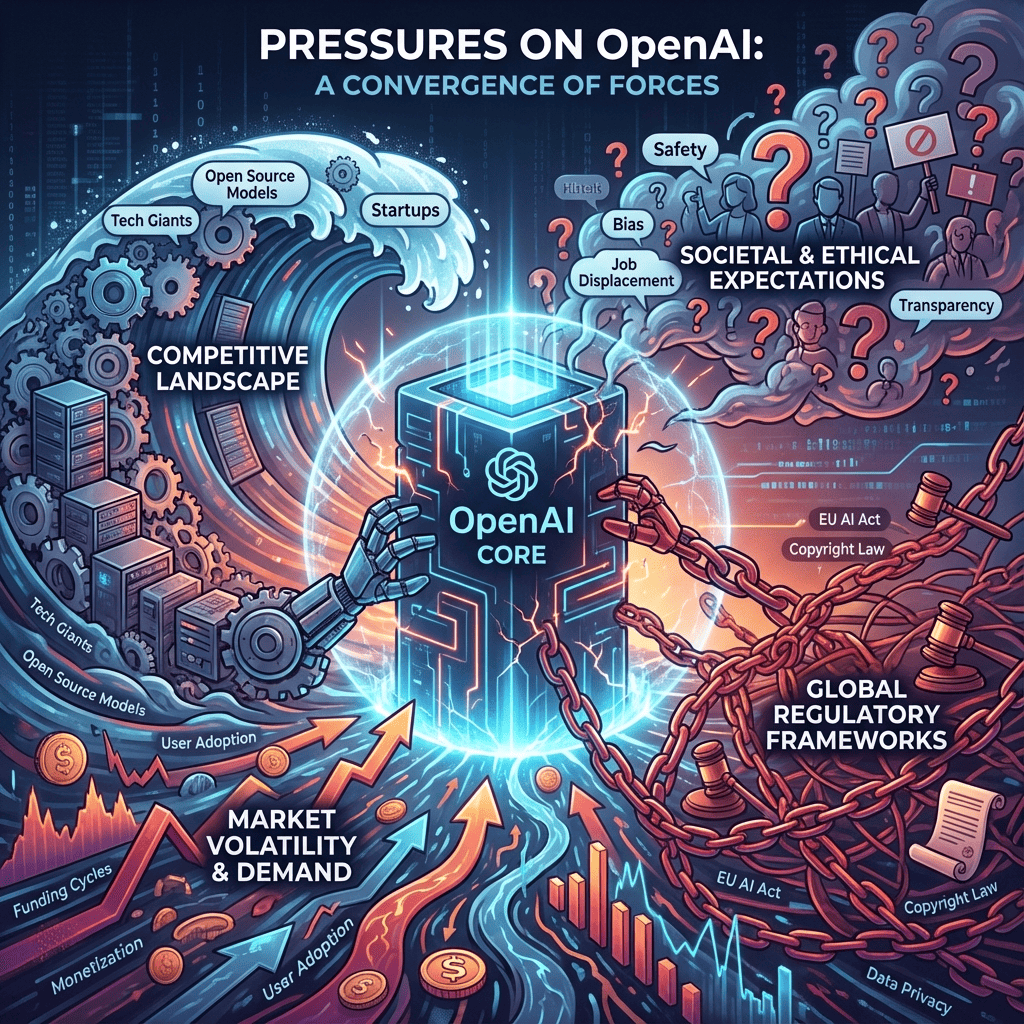

Today’s Code Red environment is shaped by four converging forces.

- Technological Acceleration Outrunning Governance

Each leap in capability—GPT-3, GPT-4, GPT-4o, Sora, o1 reasoning models—compresses the time OpenAI has to evaluate risks, align stakeholders, perform safety testing, and integrate guardrails.

The research ethos of OpenAI was built on the assumption of slower iteration cycles. But the market’s expectations are vastly different:

- Enterprise customers want continuous performance gains

- Developers want more modalities, more autonomy, and more context length

- Competitors release feature-parity updates within weeks

- Investors demand velocity, ubiquity, and platform dominance

This creates structural strain: the governance and innovation clocks no longer run at the same pace.

- Commercial Success Has Created Paradoxes

The more OpenAI succeeds commercially, the harder it becomes to maintain the protective distance implied by its mission. OpenAI now sits simultaneously as:

- A research lab

- A commercial product company

- An infrastructure platform provider

- A geopolitical actor

- A de facto standard-setter

With each breakthrough, risk increases and scrutiny intensifies, yet the incentives to accelerate grow stronger. This is a classic strategic paradox: commercialization amplifies the very risks the mission was designed to control.

- Safety Imperatives Compete With Competitive Imperatives

Safety researchers urge caution; commercial teams push toward shipping; customers demand reliability; regulators demand transparency; competitors demand speed.

The result is not dysfunction—it is misalignment.

And misalignment at scale creates existential pressure.

- Regulatory Uncertainty Widens the Gap

Governments around the world are struggling to keep pace with AI innovation. This means OpenAI is not simply reacting to regulation—it is helping shape it, all while facing scrutiny for being both an innovator and a gatekeeper.

The stakes are global:

- Misinformation

- Labor market disruption

- National security implications

- Scientific acceleration

- Synthetic media governance

- Data sovereignty

The more powerful the technology becomes, the more consequential every decision becomes. This intensifies internal strain.

The New Competitive Reality: China, Google, and the Global AGI Race

OpenAI’s Code Red cannot be understood without acknowledging the rapidly expanding competitive pressures, especially those emerging from China’s foundation model ecosystem and from Google’s Gemini Nano and Nano Banana models.

Together, these forces transform OpenAI’s challenge from a domestic rivalry into a global technology race with deep strategic implications.

- China’s Foundation Models: A Parallel and Accelerating Track

It is now clear that China is no longer trailing the West in AI capability—it is building a parallel AGI stack at extraordinary speed.

Leading players include:

- Alibaba Qwen – extraordinarily capable multilingual models, strong long-context performance

- Baidu ERNIE – rapid advancements in multimodal research and tool-use alignment

- Zhipu / GLM – open-weight models optimized for efficiency, control, and enterprise use

- Tencent Hunyuan – powerful multimodal systems integrated into WeChat and cloud ecosystems

China’s acceleration is driven by:

- National strategic mandates supporting compute and experimentation

- High-volume enterprise use cases that stress-test models at scale

- A regulatory environment that, while strong, is more permissive of rapid prototyping

- A massive domestic market enabling rapid product/market feedback loops

For OpenAI, this means slowing down for safety has strategic consequences—it risks ceding leadership, not just market share.

From a geopolitical standpoint, this is the real Code Red:

If OpenAI’s governance model introduces friction and China accelerates, global power dynamics shift in real time.

- Google’s Gemini Nano and Nano Banana: A Quiet but Devastating Disruptor

While flagship models such as GPT-4 and Gemini Ultra occupy the public imagination, Google’s smaller, distribution-first models represent a tectonic shift:

Gemini Nano:

- Efficient, on-device models

- Low latency, because inference runs locally

- Multimodal capability

- Runs privately, without cloud dependency

- Distributed through Android’s system-level AICore service to supported devices

Nano Banana:

- The popular name for Gemini 2.5 Flash Image, Google’s image generation and editing model

- Built on the fast, lower-cost Flash tier rather than the flagship tier

- Available through both the Gemini app and the Gemini API, reaching consumers and developers at once

Together, Nano and Banana present a strategic threat:

- Ubiquity outcompetes raw capability

- On-device models compress cost curves

- Tight Android + Search integration gives Google instant global distribution

- Developers can build apps without ever touching OpenAI’s API

This raises a critical question:

What happens when the world’s largest mobile OS and search engine bundle their own models as default infrastructure?

OpenAI’s competitive pressure does not just come from better models—it comes from superior distribution.

The Feature/Function Arms Race and the Rise of Competitive Parity

Every major AI lab now faces the same dual reality:

- Innovation is accelerating.

- Differentiation is decelerating.

This has led to what can only be described as a feature/function arms race—a rapid-fire cycle where every major release is matched, mimicked, or surpassed within weeks.

- Former Differentiators Are Now Table Stakes

Capabilities once considered extraordinary are now baseline expectations:

- Multimodal understanding

- High-fidelity image/video generation

- Long context windows

- Agents and tool use

- Structured reasoning

- Latency improvements

- Efficient fine-tuning

- On-device inference

Parity is becoming pervasive. This undermines the foundation of traditional competitive advantage.

- Feature Velocity Produces Diminishing Strategic Returns

The market is normalizing around “good enough” across the top 5–6 models.

Gains at the frontier remain extraordinary, but the real-world distance between models is narrowing.

Differentiation increasingly depends on:

- Governance and safety

- Reliability

- Price-performance efficiency

- Deployment flexibility

- Enterprise support

- Ecosystem design

- Trust

In other words, platform strategy now matters more than raw capability.

- The Barrier to True Differentiation Is Rising Sharply

To “escape the arms race,” a company must introduce paradigm shifts:

- Entirely new model classes

- Orders-of-magnitude efficiency breakthroughs

- Autonomous agent orchestration layers

- Hybrid embodied + digital systems

- Dominant distribution channels

This is why OpenAI’s Code Red is not simply about technology—it’s about the widening chasm between breakthroughs and breakaways.

The Strategic Failure Mode: When Strategy and Technology Decouple

Most companies fail not because they lack innovation but because they misalign their strategy, governance, and operating model with the speed and stakes of their technology.

At OpenAI, three failure modes threaten that alignment.

- Governance Drift

The governance model designed for a smaller, slower, mission-driven organization is struggling to keep pace with:

- Multi-billion-dollar investment flows

- Enterprise platform expectations

- Government partnerships

- Global risk complexity

- Weekly feature releases

Governance must evolve to match the new operational reality.

- Mission Ambiguity

“Benefit all of humanity” is a noble vision—but too broad to operationalize.

Without measurable standards:

- Product decisions drift

- Safety becomes subjective

- Research agendas fragment

- Teams work under inconsistent assumptions

Mission without metrics produces noise, not clarity.

- Organizational Friction

Safety wants slower.

Engineering wants faster.

Product wants simplicity.

Partnerships want openness.

Regulators want more transparency.

Enterprises want more control.

These competing imperatives are predictable, but without structure, they create disorder.

The result is not chaos—it is strategic incoherence.

The Path Forward: Reintegration of Strategy and Technology

OpenAI’s challenge is not technical maturity. It is strategic maturity.

The path out of Code Red requires a deep reintegration across four pillars.

- Embedded Governance: Safety as Architecture, Not Oversight

Safety cannot remain an “after-build” review process. It must be engineered into every layer:

- Training pipelines

- Evaluation frameworks

- Deployment systems

- Agent tool-use layers

- Real-time monitoring systems

This requires:

- Hard technical checkpoints

- Dynamic risk scoring models

- Automated misuse detection

- Circuit breakers for emergent behavior

- Audit-friendly reasoning traces

Embedded governance does not slow innovation—it enables sustainable innovation at scale.
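As an illustration only, the “dynamic risk scoring” and “circuit breaker” requirements above can be combined in a few lines of Python. This is a minimal sketch under stated assumptions, not a description of any system OpenAI actually runs: the `RiskCircuitBreaker` class, the 0–1 risk scale, the 0.7 threshold, and the rolling window are all hypothetical, and the risk scores are assumed to come from an upstream misuse classifier that is not shown.

```python
from collections import deque
from dataclasses import dataclass, field
from statistics import mean


@dataclass
class RiskCircuitBreaker:
    """Hypothetical breaker that halts traffic when the rolling average
    risk score of recent model outputs reaches a threshold."""

    threshold: float = 0.7  # assumed trip point on a 0..1 risk scale
    window: int = 5         # number of most recent scores to average
    scores: deque = field(default_factory=deque, init=False)
    tripped: bool = field(default=False, init=False)
    audit_log: list = field(default_factory=list, init=False)

    def __post_init__(self) -> None:
        if self.window < 1:
            raise ValueError("window must be at least 1")
        # Bounded buffer: the oldest score drops out as a new one arrives.
        self.scores = deque(maxlen=self.window)

    def record(self, request_id: str, risk_score: float) -> bool:
        """Log one scored output and return True if traffic may continue."""
        if not 0.0 <= risk_score <= 1.0:
            raise ValueError("risk_score must be between 0 and 1")
        self.scores.append(risk_score)
        rolling = mean(self.scores)
        # Audit-friendly trace: each decision is stored with its inputs.
        self.audit_log.append((request_id, risk_score, round(rolling, 3)))
        if rolling >= self.threshold:
            self.tripped = True  # latches open until a human calls reset()
        return not self.tripped

    def reset(self) -> None:
        """Manual reset after human review: the 'hard checkpoint'."""
        self.scores.clear()
        self.tripped = False


if __name__ == "__main__":
    breaker = RiskCircuitBreaker(threshold=0.7, window=3)
    # Placeholder scores standing in for an upstream risk classifier.
    for i, score in enumerate([0.1, 0.2, 0.9, 0.95, 0.97]):
        allowed = breaker.record(f"req-{i}", score)
        print(f"req-{i}: score={score} allowed={allowed}")
```

The design choice worth noting is that the breaker latches: once tripped, it stays open until `reset()` is called, modeling a human checkpoint rather than automatic recovery. A real deployment would likely need per-category scores and durable audit storage as well.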

- Transparent Coalition-Building: Collaborate to Shape Global Guardrails

OpenAI must deepen collaboration with:

- Regulators

- International policy bodies

- Academic institutions

- Industry ecosystem partners

- National security stakeholders

Key commitments include:

- Regular technical briefings

- Capability forecasting

- Open evaluation datasets

- Support for independent audits

- Public transparency dashboards

In an era of geopolitical AI competition, trust becomes a strategic asset, not a compliance requirement.

- Operationalize the Mission: Make “Benefit to Humanity” Measurable

To anchor decisions, OpenAI must define quantifiable metrics across:

Societal Benefit

E.g., deployments in education, public-sector transformation, and scientific discovery.

Safety

Incident rates, red-team benchmarks, alignment drift scores.

Equity

Global access, pricing parity, and community impact reports.

Governance

Audit cadence, model transparency, compliance thresholds.
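To make “measurable” concrete, here is a minimal Python sketch of a mission scorecard that rolls metrics up by pillar. It assumes each metric has a numeric target and a direction (higher or lower is better); the `Metric` class, the `scorecard()` function, the metric names, and every number in the example are hypothetical placeholders, not figures OpenAI has published.

```python
from __future__ import annotations

from collections import defaultdict
from dataclasses import dataclass


@dataclass(frozen=True)
class Metric:
    """One hypothetical mission metric with a numeric target."""

    pillar: str                    # e.g. "Safety", "Equity", "Governance"
    name: str
    value: float
    target: float
    higher_is_better: bool = True  # False for metrics like incident rates

    def meets_target(self) -> bool:
        if self.higher_is_better:
            return self.value >= self.target
        return self.value <= self.target


def scorecard(metrics: list[Metric]) -> dict[str, float]:
    """Return, for each pillar, the share of its metrics meeting target."""
    met: dict[str, int] = defaultdict(int)
    total: dict[str, int] = defaultdict(int)
    for m in metrics:
        total[m.pillar] += 1
        if m.meets_target():
            met[m.pillar] += 1
    return {pillar: met[pillar] / total[pillar] for pillar in total}


if __name__ == "__main__":
    # Placeholder values for illustration only; not real data.
    metrics = [
        Metric("Safety", "incident_rate", 0.8, 1.0, higher_is_better=False),
        Metric("Safety", "red_team_pass_rate", 0.92, 0.95),
        Metric("Equity", "global_access_share", 0.6, 0.5),
        Metric("Governance", "audits_per_year", 2, 4),
    ]
    print(scorecard(metrics))
    # → {'Safety': 0.5, 'Equity': 1.0, 'Governance': 0.0}
```

Reporting a share per pillar, rather than one blended score, keeps a strong Equity result from masking a weak Safety one; how to weight the pillars against each other is a governance decision this sketch deliberately leaves open.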

What gets measured gets managed.

What gets managed shapes the future.

- Build an Operating Model Where Strategy and Technology Co-Evolve

OpenAI must mature into a dual operating system:

Exploration Track

Long-horizon AGI research, careful release pacing, and scientific rigor.

Exploitation Track

Enterprise-grade products, rapid iteration, operational excellence.

Supporting both requires:

- Scenario planning

- Cross-functional alignment

- Clear escalation protocols

- Hardware/compute strategy

- Security and risk architecture

- A board with domain-diverse expertise

This is how to ensure that innovation velocity and governance integrity become complementary, not conflicting.

Strategic Lessons for Every Executive

OpenAI is experiencing in extreme form what every enterprise will face as AI becomes core infrastructure.

- Strategy must be dynamic, not annual.

Technology cycles move too quickly for a static strategy.

- Governance must be embedded, not appended.

Responsibility is an engineering problem.

- Mission must be measurable, not rhetorical.

Ambition without metrics is drift.

- Organizational structure must reflect dual futures.

Exploit today. Explore tomorrow.

- Trust is the new competitive moat.

Transparency accelerates adoption; opacity erodes it.

The Real Code Red Is Misalignment

The crisis at OpenAI is not simply about the speed of AI development.

It is about misalignment between:

- Mission and commercialization

- Breakthroughs and guardrails

- Safety and scale

- Competition and governance

- Technology and strategy

When technology outpaces strategy, organizations lose control.

When strategy outpaces technology, organizations lose relevance.

When the two move together, transformation becomes possible.

OpenAI’s path forward—and the path forward for all enterprises navigating AI—is to engineer strategy and technology as interdependent systems, not competing agendas.

Code Red is not a warning; it is an opportunity—the moment when discipline, design, and intention redefine the future.

Article Sources:

- McKinsey Global Institute (2024) – The Economic Potential of Generative AI and Global Competitive Shifts.

- Stanford HAI AI Index Report (2024–2025) – Global AI Capabilities Benchmarking.

- NIST (2023–2024) – AI Risk Management Framework and Updates.

- OECD AI Policy Observatory (2024) – Regulatory Landscape for Advanced AI Systems.

- Gartner Emerging Tech Radar (2024–2025) – Agentic Systems and Foundation Model Evolution.

- MIT Technology Review (2024–2025) – China’s Rapid Rise in Foundation Model Development.